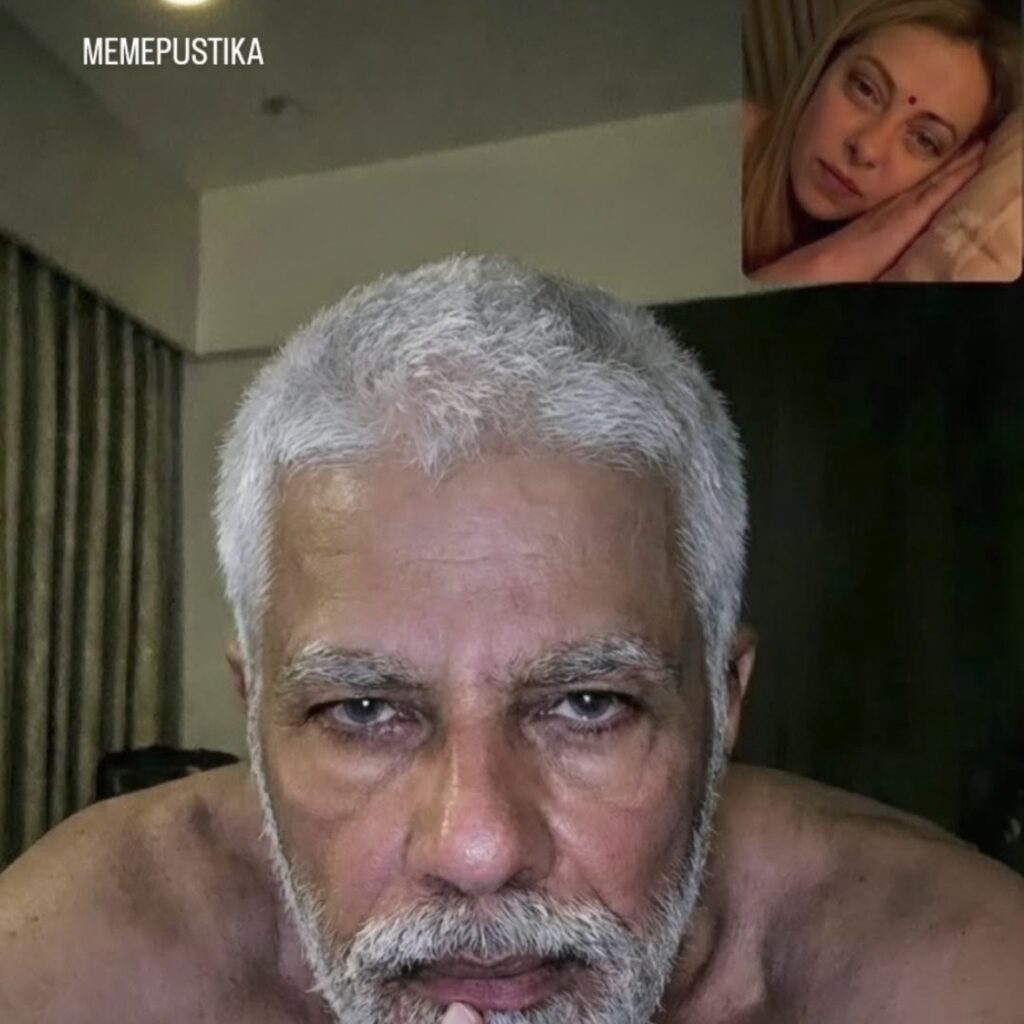

Artificial Intelligence (AI) has advanced rapidly over the past decade, transforming industries from healthcare to entertainment. Yet powerful as these innovations are, they also bring new risks, especially when it comes to video creation. AI-generated videos, including deepfakes and other synthetic media, are becoming alarmingly realistic. This has stirred debate about how “dangerous” AI really is when the average person can no longer distinguish what’s real from what’s fabricated.

1. The Rise of AI Video Generation

Today’s AI models, built on generative adversarial networks (GANs) and diffusion techniques and often guided by large language models that interpret text prompts, can produce videos that mimic human faces, voices, and behaviors with astonishing accuracy. What once required expert-level skills and high-end computing is now available through user-friendly online tools. This means anyone, regardless of technical background, can create convincingly fake videos in minutes.

But this convenience comes with consequences.

2. Why People Struggle to Differentiate

There are two major reasons why AI videos are so hard to spot:

● Realism at an unprecedented scale

Modern AI tools replicate nuances like facial expressions, lip-syncing, and lighting effects, down to the fine details humans usually rely on to judge authenticity. When audio and video align perfectly in real time, our brains tend to accept the video as “real,” even if it’s entirely synthetic.

● Psychological and cognitive limitations

Humans are not naturally equipped to detect manipulated media, especially when the content aligns with their expectations or biases. In high-stakes situations — such as political videos, celebrity speeches, or news footage — people often assume authenticity unless proven otherwise.

3. Risks to Trust and Society

When people can’t tell real from fake, it erodes trust across multiple sectors:

Disinformation & Politics: Deepfake videos have already been used to spread false narratives, attempt to influence elections, and damage reputations. A convincingly fake video of a politician making controversial remarks can spark outrage before it’s ever fact-checked.

Legal implications: Synthetic media complicates law enforcement and legal proceedings. Video evidence, once considered among the strongest forms of proof, can now be fabricated, which calls into question its authenticity and its admissibility in court.

Personal harm: Individuals can be targeted with fake videos that defame or shame them, or that turn public opinion against them. For example, fake videos portraying private individuals in compromising situations can lead to emotional trauma and social backlash.

4. What Technology Companies Are Doing

Recognizing the growing threat, tech companies and researchers are building tools to fight back:

Detection tools: AI-based detectors aim to flag manipulated video content by analyzing inconsistencies in the pixels themselves or in the patterns a neural network learns to associate with synthetic footage.

Digital watermarks: Some platforms embed watermarks into authentic content to allow verification later.

Regulations & policies: Governments and social media platforms are beginning to enact policies that require disclosures for AI-generated content — though global implementation varies widely.

However, these solutions are playing catch-up as generative AI continues to evolve at a rapid pace.
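To make the "verify later" idea behind watermarking more concrete, here is a minimal Python sketch. It is not a real watermark embedded in the pixels. Instead it uses a simpler stand-in: a keyed hash (HMAC-SHA256) computed over the file's bytes at publication time and checked again later. The function names `sign_video` and `verify_video`, the demo key, and the sample bytes are all hypothetical and chosen only for illustration.

```python
import hashlib
import hmac


def sign_video(video_bytes: bytes, secret_key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag computed over the raw video bytes."""
    return hmac.new(secret_key, video_bytes, hashlib.sha256).hexdigest()


def verify_video(video_bytes: bytes, secret_key: bytes, tag: str) -> bool:
    """Check whether the bytes still match the tag issued at publication."""
    expected = sign_video(video_bytes, secret_key)
    # compare_digest avoids leaking information through comparison timing
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    key = b"publisher-demo-key"            # hypothetical key held by the publisher
    original = b"example video bytes"      # stand-in for a real video file
    tag = sign_video(original, key)

    print(verify_video(original, key, tag))         # unchanged file
    print(verify_video(original + b"!", key, tag))  # altered file
```

A tag like this only shows that a file is byte-for-byte unchanged since it was signed. It breaks as soon as the video is re-encoded or cropped, which is one reason real systems tend to rely on watermarks designed to survive such changes, or on signed provenance metadata, rather than a bare hash.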

5. What We Can Do as Individuals

Although the technology is advancing fast, there are practical steps everyday users can take:

Check multiple sources: If a video seems sensational, cross-reference it with trusted media outlets.

Understand context: Videos without credible sourcing or published on unfamiliar channels should be treated cautiously.

Know the signs: While detection is harder than ever, look for odd lighting, unnatural speech patterns, or mismatched audio — subtle clues that might reveal fabrication.

Conclusion

AI’s ability to generate ultra-realistic videos represents both innovation and risk. While the technology holds enormous potential — from film production to education — its misuse threatens personal reputations, democratic systems, and societal trust. As AI continues to become more powerful, society must adapt through stronger detection tools, clearer regulations, and better public awareness.

The real danger isn’t just that AI can create fake videos — it’s that people are no longer certain what’s real. And when reality becomes uncertain, truth itself is at stake.